Projects

Here are some of the group's ongoing research directions. We have openings for curious students at all levels!

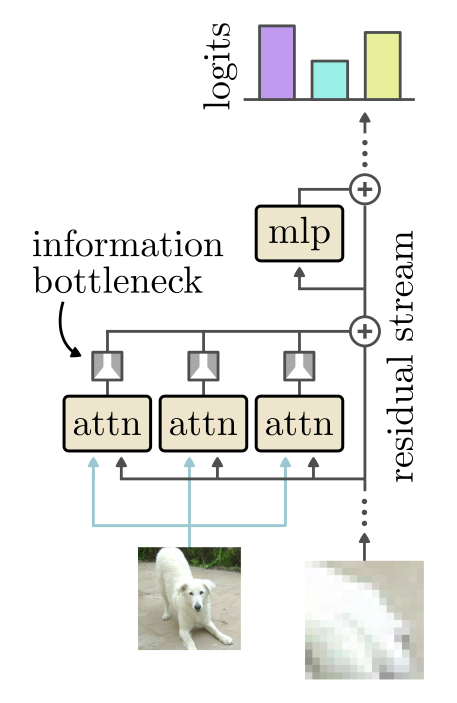

How do transformers process information?

Transformers aggregate information from many pieces (language fragments, image patches, temporal intervals, and so on) into a global representation of the whole. In this project, we restrict the flow of information between pieces as a transformer processes them, which gives us an entirely new perspective on how transformers build up representations from local to global.
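To make "restricting the flow of information between pieces" concrete, here is a minimal sketch of one generic way to do it. It assumes that each piece is first squeezed through its own noisy Gaussian channel, and only the noisy messages are allowed to mix. The KL divergence of each channel from a standard normal prior, averaged over data, upper-bounds how much information that piece sends. The function names, the hypothetical per-piece encoder outputs `(mu, logvar)`, and the mean-pooling stand-in for attention are all illustrative assumptions, not the group's implementation.

```python
import math
import random


def gaussian_kl_nats(mu, logvar):
    """KL( N(mu, diag(exp(logvar))) || N(0, I) ) in nats for one piece."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))


def bottleneck_piece(mu, logvar, rng):
    """Reparameterized sample z = mu + sigma * eps from one piece's channel."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def distributed_bottleneck(pieces, rng):
    """Compress every piece independently, before any mixing happens.

    `pieces` is a list of (mu, logvar) pairs, one per token/patch, assumed to
    come from some per-piece encoder (hypothetical here). Returns the noisy
    messages and each piece's information cost in nats.
    """
    messages = [bottleneck_piece(mu, lv, rng) for mu, lv in pieces]
    costs = [gaussian_kl_nats(mu, lv) for mu, lv in pieces]
    return messages, costs


def mean_pool(messages):
    """Stand-in for attention: mixes only the noisy messages, never raw inputs."""
    dim = len(messages[0])
    return [sum(m[d] for m in messages) / len(messages) for d in range(dim)]


if __name__ == "__main__":
    pieces = [
        ([0.0, 0.0], [0.0, 0.0]),                           # matches the prior: sends nothing
        ([2.0, -1.0], [math.log(0.1), math.log(0.1)]),      # informative, low-noise piece
    ]
    messages, costs = distributed_bottleneck(pieces, random.Random(0))
    print([round(c, 4) for c in costs])  # → [0.0, 3.9026]
    print(mean_pool(messages))
```

The first piece's channel is identical to the prior, so it costs zero nats and contributes pure noise to the pooled vector. Adding a weighted sum of the per-piece costs to a task loss would push a model to spend information only on the pieces that need it, and the per-piece costs then show where the information came from.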

Research highlights:

- From independent patches to coordinated attention: Controlling information flow in vision transformers, arXiv 2026.

This project builds upon several recent publications that use the distributed information bottleneck to restrict and monitor information in composite systems:

Probabilistic representation learning: engineering how information is stored in latent spaces

Deep learning stacks transformations on top of one another, turning data into representations that twist and contort into something (hopefully) useful. We'd love to be able to measure how similar the representations of two networks are, and we'd also love more control over the nature of those representations. It turns out that both become easier if you force representations to be probability distributions and view latent spaces as communication channels.
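Why probability distributions help: a deterministic encoder of continuous inputs carries an infinite or ill-defined amount of information, whereas a noisy encoder is a channel whose information content is finite and measurable. The sketch below, assuming an isotropic Gaussian channel and a small, uniformly weighted set of inputs, estimates the mutual information I(X; Z) by Monte Carlo. The function names and parameters are hypothetical illustrations of the channel view, not the group's method.

```python
import math
import random


def log_gauss(z, mu, sigma):
    """Log density of the isotropic Gaussian N(mu, sigma^2 I) evaluated at z."""
    d = len(z)
    sq = sum((zi - mi) ** 2 for zi, mi in zip(z, mu))
    return -0.5 * sq / sigma**2 - d * math.log(sigma) - 0.5 * d * math.log(2 * math.pi)


def channel_information_nats(means, sigma, samples_per_input=200, seed=0):
    """Monte Carlo estimate of I(X; Z) for N equally likely inputs.

    Input i is encoded as Z ~ N(means[i], sigma^2 I). Each sample contributes
    log p(z | x_i) - log((1/N) * sum_j p(z | x_j)), which is at most log N.
    """
    rng = random.Random(seed)
    n = len(means)
    total = 0.0
    for mu in means:
        for _ in range(samples_per_input):
            z = [m + sigma * rng.gauss(0.0, 1.0) for m in mu]
            log_cond = log_gauss(z, mu, sigma)
            logs = [log_gauss(z, mj, sigma) for mj in means]
            top = max(logs)  # log-sum-exp for numerical stability
            log_marg = top + math.log(sum(math.exp(v - top) for v in logs)) - math.log(n)
            total += log_cond - log_marg
    return total / (n * samples_per_input)


if __name__ == "__main__":
    separated = [[0.0], [10.0], [20.0], [30.0]]
    print(channel_information_nats(separated, sigma=0.5))  # close to log(4) ≈ 1.386 nats
    print(channel_information_nats(separated, sigma=20.0))  # much less: encodings overlap
```

Raising the noise level makes the encoded distributions of different inputs overlap, so the channel transmits less; this is the kind of dial a probabilistic latent space exposes.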

Research highlights:

Information games

What happens in multi-agent scenarios when information is not a means to some other end, but rather the ultimate objective itself?

Do effective strategies for deceit and for efficient sensing arise naturally?

Using tools we’ve developed to characterize the nature of distributed information, we are studying how agents acquire information and deceive their opponents.